How the Rise of Edge Computing Will Reshape the Data Center Landscape

Over the next decade, new technologies such as connected vehicles will continue to transform and automate our everyday lives. To realize the full potential of these technologies, we still need to overcome some key technical limitations, chief among them network latency. Edge computing can help.

By Siemon Interconnect Solutions

In the next decade, we will continue to see rapid growth in the number of IP-connected mobile and machine-to-machine (M2M) devices, which will generate a significant share of IP traffic. Tomorrow’s consumers will demand faster wireless service and application delivery from online providers. In addition, some M2M devices, such as autonomous vehicles, will require real-time communication with local processing resources to ensure safety.

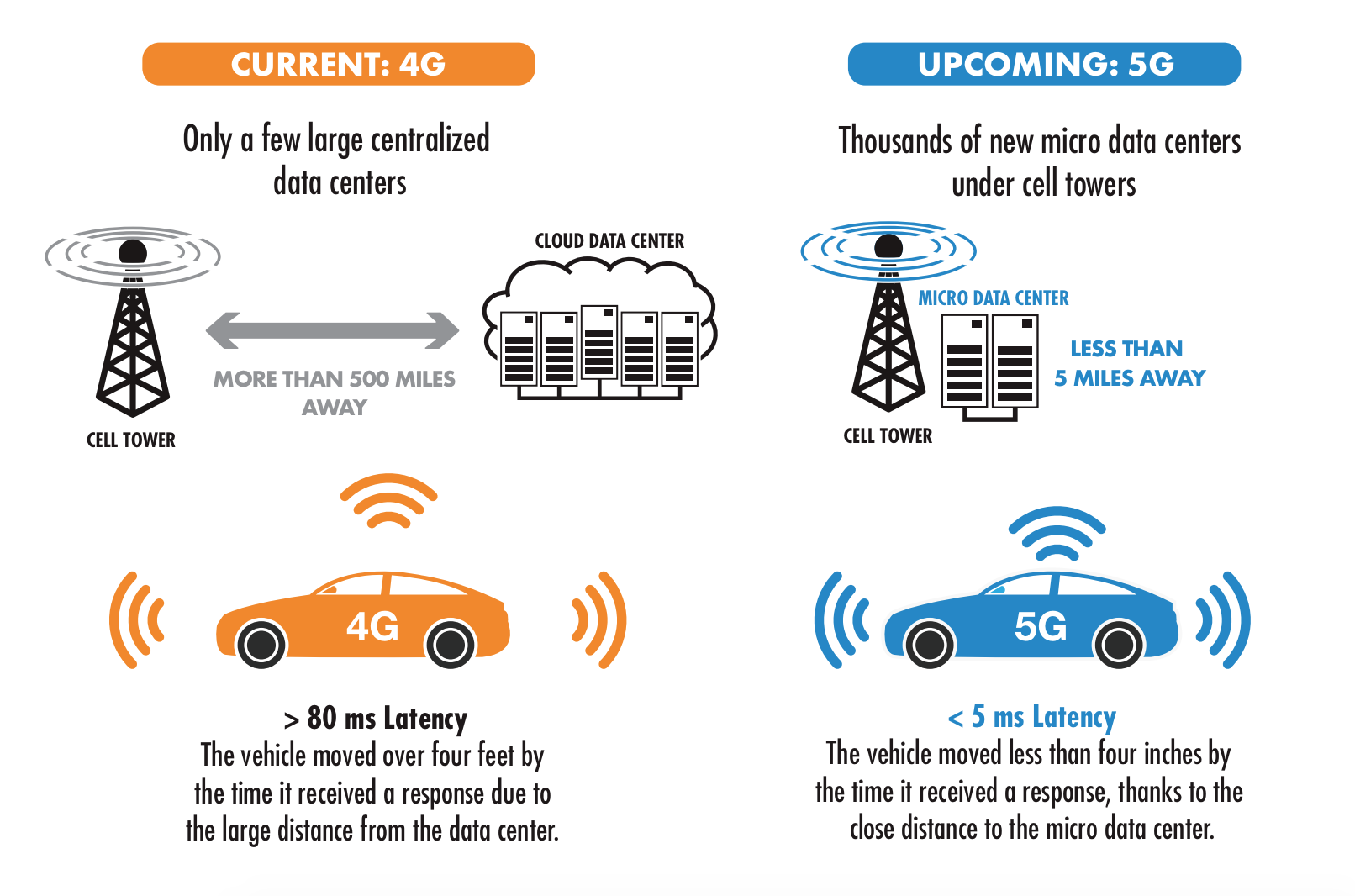

Today’s IP networks were not designed for the high-speed, low-latency data transmission that tomorrow’s connected devices will require. In a traditional IP architecture, data must often travel hundreds of miles over a network between end users or devices and cloud resources. That distance introduces latency: delay in delivering time-sensitive data.

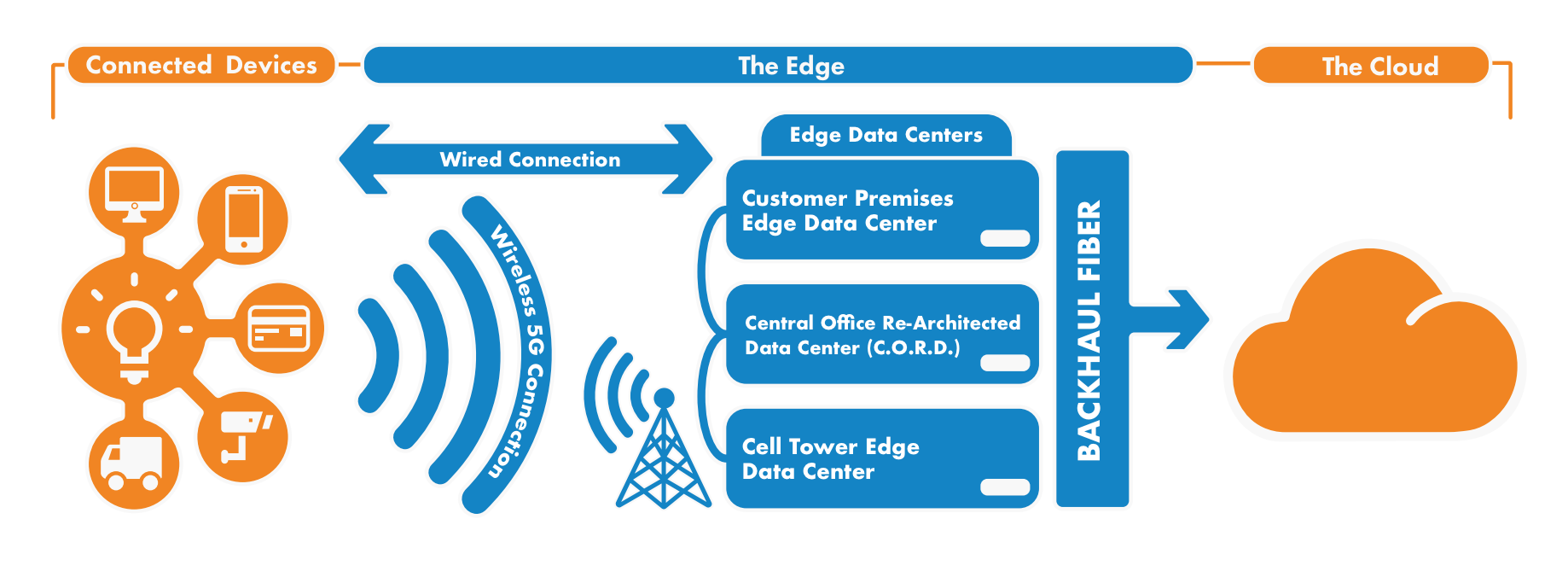

Edge computing offers a way to reduce that latency. By deploying IT resources for cloud-based services in edge data centers located close to end users and devices, we shorten the distance data must travel and achieve efficient, high-speed delivery of applications and data. Edge data centers are typically located at the edge of a network, with connections back to a centralized cloud core.

Many new technologies will use and benefit from edge data centers, including fifth-generation (5G) networks, Internet of Things (IoT) and Industrial Internet of Things (IIoT) devices, autonomous vehicles, virtual and augmented reality, artificial intelligence and machine learning, data analytics, and video streaming and surveillance. High-tech providers that invest in edge data centers today will gain a competitive edge and will be able to offer faster, more reliable delivery of their services and applications. To support IoT, IIoT, and other next-generation technologies, we must expand data and network capacity, reduce latency, and process data and applications faster.

In short, rather than bringing users and devices to the data center, edge computing brings the power of the data center to users and devices. It relies on a distributed data center architecture in which cloud servers housed in edge data centers are deployed at the outer edges of a network. By placing IT resources closer to the end users and devices they serve, we can achieve high-speed, low-latency processing of applications and data.

The Problem of Latency

Several factors contribute to latency: the physical distance that data must travel between centralized cloud servers and end-user devices, the number of network hops (switches and routers) that a transmission passes through, and the amount of traffic on the network. For consumers, latency is usually just an annoyance, such as a slow movie download or lag that hurts reaction time in an online video game. Occasionally, delays or transmission errors cause an application to fail, and it must be reloaded.
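
To make the three factors above concrete, the following Python sketch adds them up for a single network path. It is a rough model, not a measurement method: it assumes light travels through optical fiber at roughly 200,000 km/s (about 5 microseconds per kilometer), and the per-hop and queuing delays in the example are hypothetical placeholders rather than measured values. The `Path` type and function names are illustrative.

```python
from dataclasses import dataclass

# Approximate signal speed in optical fiber: roughly two-thirds of the speed of
# light in a vacuum, i.e. about 200,000 km/s, or 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


@dataclass
class Path:
    distance_km: float  # one-way fiber distance between device and server
    hops: int           # number of switch/router hops on the path
    per_hop_ms: float   # assumed forwarding delay per hop
    queuing_ms: float   # assumed total queuing delay caused by traffic load


def one_way_latency_ms(path: Path) -> float:
    """Sum the three latency factors: distance, hops, and traffic."""
    propagation = path.distance_km / FIBER_KM_PER_MS
    switching = path.hops * path.per_hop_ms
    return propagation + switching + path.queuing_ms


def round_trip_ms(path: Path) -> float:
    """Assume a symmetric path, so the round trip is twice the one-way delay."""
    return 2 * one_way_latency_ms(path)


if __name__ == "__main__":
    # Hypothetical paths: a distant cloud core (~500 miles) vs. a nearby edge site.
    cloud = Path(distance_km=800, hops=12, per_hop_ms=0.5, queuing_ms=5.0)
    edge = Path(distance_km=5, hops=2, per_hop_ms=0.5, queuing_ms=0.5)
    print(f"cloud round trip: {round_trip_ms(cloud):.1f} ms")
    print(f"edge round trip:  {round_trip_ms(edge):.2f} ms")
```

With these assumed numbers, the distant cloud path comes out at about 30 ms round trip and the nearby edge path at about 3 ms. Distance is only one term; hop count and congestion also matter, which is why the sketch keeps them separate.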

For IoT and M2M devices, network latency can be a major impediment, especially for devices that rely on guaranteed response times and real-time processing of data and applications.

A prime example is the autonomous vehicle, which will rely on interactions between internal and external devices to ensure the safety of its passengers, other motorists, and pedestrians. Although autonomous vehicles will carry powerful onboard safety systems built from a combination of computers and sensors, they cannot operate as fully independent, self-contained systems. They will also need to communicate with smart traffic signals and road sensors to receive information about current traffic conditions and possible safety threats.

For example, if an autonomous vehicle is approaching a blind intersection, its sensors can’t “see” around the corners of nearby buildings. Instead, a smart traffic light installed above the intersection would tell the vehicle whether it is safe to proceed. In this scenario, sending the traffic light’s data over a long-distance network to a centralized cloud core and waiting for a response would introduce too much latency to be practical.

In this example, an edge data center would be installed at the blind intersection to operate the smart traffic light. It might be a quickly deployed enclosure on a nearby street corner, or even a small box at the base of the traffic light that holds a microprocessor. If sensors detect that another car is about to speed through a red light at the intersection, the servers in the edge data center would immediately run a warning application, directing the smart traffic light to signal the autonomous vehicle to brake. Reducing the distance that data must travel minimizes latency, allowing the application to respond in real time and avoid a collision.
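
One way to see why every millisecond matters at the intersection is to convert latency into distance. The Python sketch below computes how far a vehicle travels while it waits for a warning to arrive. The 50 km/h speed and the 100 ms and 5 ms round-trip times (including processing) are illustrative assumptions, not figures from any real deployment, and the function name is hypothetical.

```python
def distance_during_delay_m(speed_kmh: float, delay_ms: float) -> float:
    """Meters a vehicle travels at speed_kmh during a delay of delay_ms."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * delay_ms / 1000   # ms -> s


if __name__ == "__main__":
    speed_kmh = 50.0  # assumed urban driving speed
    # Assumed round-trip times for a distant cloud core and a roadside edge site.
    for label, rtt_ms in [("cloud core", 100.0), ("edge site", 5.0)]:
        meters = distance_during_delay_m(speed_kmh, rtt_ms)
        print(f"{label}: {rtt_ms:.0f} ms round trip -> {meters:.2f} m traveled")
```

At 50 km/h the vehicle covers roughly 1.4 m during a 100 ms round trip, versus about 7 cm during 5 ms. Moving processing to the edge does not make latency zero, but it shrinks the distance the vehicle covers before it can begin braking.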

What Is an Edge Data Center?

An edge data center is a self-contained data center that holds localized IT deployments for cloud services, with compute, storage, and analytics resources for application processing and data caching. Note that an edge data center is a true data center, not just a Wi-Fi router or a point of presence on the network. It includes the same power, cooling, connectivity, and security features found in a centralized data center, but on a smaller scale. Its IT equipment processes applications, runs data analytics, and stores data close to the end users and devices that rely on them.

An edge data center sits at the outer edge of an IP network and connects back to a centralized cloud core, usually housed in a data center some distance away. Groups of edge data centers are often interconnected to form an aggregated edge, or edge cloud, which provides a shared pool of localized compute, storage, and network resources.
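
The article does not specify how an aggregated edge shares its resource pool. One simple policy, shown here purely as a hypothetical sketch, is to send each request to the lowest-latency edge site that has spare capacity and falls within a latency budget, and to fall back to the centralized cloud core when no edge site qualifies. The `Site` type, the `place_request` function, and all numbers are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Site:
    name: str
    rtt_ms: float    # measured round-trip time from the requesting device
    free_cores: int  # spare compute capacity this site contributes to the pool


def place_request(
    edge_sites: Sequence[Site],
    cloud_core: Site,
    cores_needed: int,
    max_rtt_ms: float,
) -> Site:
    """Choose the nearest edge site that fits the request, else the cloud core."""
    candidates = [
        s for s in edge_sites
        if s.free_cores >= cores_needed and s.rtt_ms <= max_rtt_ms
    ]
    if candidates:
        # Among qualifying edge sites, prefer the lowest round-trip time.
        return min(candidates, key=lambda s: s.rtt_ms)
    # No edge site has room within the latency budget: fall back to the
    # centralized cloud core and accept its higher latency.
    return cloud_core


if __name__ == "__main__":
    # Hypothetical edge cloud of three interconnected sites plus the core.
    edge = [
        Site("edge-a", rtt_ms=2.0, free_cores=0),
        Site("edge-b", rtt_ms=3.5, free_cores=8),
        Site("edge-c", rtt_ms=6.0, free_cores=16),
    ]
    core = Site("cloud-core", rtt_ms=40.0, free_cores=10_000)
    print(place_request(edge, core, cores_needed=4, max_rtt_ms=5.0).name)   # edge-b
    print(place_request(edge, core, cores_needed=32, max_rtt_ms=5.0).name)  # cloud-core
```

A production scheduler would likely weigh more factors, such as current load and where the data already lives; the point here is only the pattern of serving locally when possible and falling back to the core otherwise.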

Essential Infrastructure

Edge data centers take many forms (modular, containerized, micro, warehouse, and office-based), but all of them require the same infrastructure elements found in larger, centralized data centers. Essential elements include:

- Fiber Optic Connectivity. All edge data centers require high-density fiber optic connectivity for high-speed, low-latency transmission. Fiber optic cabling is the best option for connecting edge data centers back to cloud facilities, hyperscale data centers, and central offices at speeds of 400Gb/s and beyond.

- High-Speed Copper Cabling. Edge data centers use high-speed copper cabling for short, direct connections from access switches to servers in cabinets. Options include direct-attach copper cable assemblies, Category 6A cabling to support 10Gb/s, and Category 8 cabling to support 25 and 40Gb/s over channels of up to 30 meters.

- Cable Management Solutions. These solutions are critical to protecting, routing, and managing cables inside any kind of edge data center. Cable management options might include cable tray systems for overhead and underfloor cable pathways, as well as horizontal and vertical cable management to protect critical copper and fiber cords.

- Automated Infrastructure Management (AIM) Tools. Many edge data centers will be unmanned or limited-access sites. Service providers will require infrastructure management tools that allow them to manage edge data centers in remote locations. This might include remote management and monitoring of copper and fiber connections and security locks, with real-time alerts of network and security events (e.g., cable connects/disconnects or the opening of a cabinet door) to help prevent downtime or unauthorized access. It may also include monitoring of power usage at the outlet level, as well as cabinet-level environmental monitoring via data center infrastructure management (DCIM).

- Intelligent PDUs. All edge data centers require power distribution units (PDUs) to distribute power to active equipment. Intelligent PDUs offer outlet-level power usage monitoring and switching control, sensors for cabinet-level environmental monitoring (e.g., temperature and humidity), and web interfaces that enable remote management and monitoring of PDUs in edge facilities from a centralized cloud location.

- Racks and Cabinets. Depending on their size, edge data centers may require up to 50 racks and/or cabinets with aisle containment. The best strategy is to use pre-configured cabinets that can be easily rolled into place inside an edge data center and populated with active IT equipment. Edge data centers also require thermal management solutions, such as blanking panels and brush guards, to keep hot and cold air separated.
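
The remote-management capabilities described in the AIM and intelligent PDU items come down to turning a stream of sensor readings and events into alerts. The Python sketch below illustrates that idea under stated assumptions: the `Reading` record, the event names, and the alert limits are hypothetical and do not represent any vendor’s API or DCIM product. The temperature band mirrors the commonly cited ASHRAE recommended inlet range of 18 to 27 °C; the humidity and power limits are placeholders.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple, Union


@dataclass
class Reading:
    site: str                 # hypothetical edge site identifier
    cabinet: str              # cabinet within the site
    kind: str                 # e.g. "temperature_c", "humidity_pct", "door", "port"
    value: Union[float, str]  # number for sensor readings, state string for events


# Placeholder limits (assumptions): tune to the operator's own policy.
LIMITS: Dict[str, Tuple[float, float]] = {
    "temperature_c": (18.0, 27.0),
    "humidity_pct": (20.0, 80.0),
    "outlet_watts": (0.0, 1500.0),
}

# Event states that should raise an alert immediately (hypothetical names).
EVENT_ALERTS: Dict[Tuple[str, str], str] = {
    ("door", "open"): "cabinet door opened",
    ("port", "disconnected"): "cord disconnected from port",
}


def check(reading: Reading) -> List[str]:
    """Return alert messages for one reading (empty list if all is normal)."""
    where = f"{reading.site}/{reading.cabinet}"
    if reading.kind in LIMITS and isinstance(reading.value, (int, float)):
        low, high = LIMITS[reading.kind]
        if not low <= reading.value <= high:
            return [f"{where}: {reading.kind}={reading.value} outside [{low}, {high}]"]
        return []
    message = EVENT_ALERTS.get((reading.kind, str(reading.value)))
    return [f"{where}: {message}"] if message else []


def scan(readings: Iterable[Reading]) -> List[str]:
    """Collect alerts across a batch of readings, e.g. one polling cycle."""
    return [alert for r in readings for alert in check(r)]


if __name__ == "__main__":
    batch = [
        Reading("edge-01", "cab-3", "temperature_c", 31.5),
        Reading("edge-01", "cab-3", "humidity_pct", 45.0),
        Reading("edge-01", "cab-1", "door", "open"),
        Reading("edge-01", "cab-1", "port", "connected"),
    ]
    for alert in scan(batch):
        print(alert)
```

In practice the readings would arrive from the site’s monitoring hardware (for example, an intelligent PDU’s sensors) over whatever interface it exposes; the sketch deliberately leaves that transport out and focuses on the alerting logic.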

Although it is expanding rapidly, edge computing is still in its infancy. The infrastructure elements (e.g., power, cooling, connectivity) and form factors (e.g., rugged containers and micro data centers) of edge data centers are fairly well developed, but widespread deployment of distributed data center architectures that provide localized cloud resources to end users and devices is only now beginning. As new technologies such as 5G networks, smart cities, and autonomous vehicles mature, they will increasingly integrate with, operate on, and depend on edge computing resources.
