SC23 Supercomputer and HPC Showcase

The Colorado Convention Center in Denver hosted SC23, this year's edition of the Supercomputing conference, under the theme "I am HPC," highlighting the depth and diversity of high-performance computing. The six-day event featured 90 research papers, 51 workshops, 37 tutorials, and 15 expert panels that explored the hardware, software, and emerging applications of this technology. HPC was once considered a technologically advanced but narrow segment of the computing industry, yet SC23 attracted a record 14,000 attendees, with industry leaders including IBM, Intel, HP, Technologies, AWS, Dell, Google, and Microsoft prominently displaying their capabilities.

One of the annual features of SC conferences is SCinet, an extremely high-speed local area network with 300 access points, built on the exposition floor using $40 million of contributed equipment. This world-record 6.71 Tb/s network was capable of downloading the entire Netflix content library in one hour.
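
To put the SCinet figure in perspective, here is a rough arithmetic sketch. It assumes the 6.71 Tb/s rate is sustained for a full hour with no protocol overhead, which real transfers would not achieve, and uses decimal units (1 Tb = 10^12 bits, 1 PB = 10^15 bytes).

```python
# Back-of-the-envelope: how much data can a link move in one hour?
# Assumption: 6.71 Tb/s is a sustained rate with zero protocol overhead.
LINK_RATE_TBPS = 6.71      # terabits per second (decimal, 10**12 bits)
SECONDS_PER_HOUR = 3600


def data_moved_petabytes(rate_tbps: float, seconds: float) -> float:
    """Return the data volume in petabytes (10**15 bytes) moved at rate_tbps for `seconds`."""
    bits = rate_tbps * 1e12 * seconds
    bytes_moved = bits / 8          # 8 bits per byte
    return bytes_moved / 1e15       # bytes -> petabytes


volume = data_moved_petabytes(LINK_RATE_TBPS, SECONDS_PER_HOUR)
print(f"{volume:.2f} PB per hour")
```

Under these assumptions the network could move roughly 3 PB in an hour.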

The exposition included 438 exhibitors showcasing components, software, and shared remote access to supercomputing resources. Booths representing leading colleges and universities highlighted projects that use their supercomputing capabilities. SC management emphasizes student involvement and nurtures participation with guided tours of the exhibit floor, student research posters, and a well-attended job fair. Student volunteers helped set up SC23 and gained exposure to its many technical sessions.

A series of major themes were evident at SC23.

Artificial intelligence (AI) has become a major target application for high-performance computers. Chips, accelerators, high-speed memory interfaces, and servers are being customized to support large language models, with accelerators becoming an enabling technology. Advanced silicon chips are being developed with integrated inference capabilities, a key attribute of AI systems.

Optimized accelerators from Nvidia were prominently displayed on the expo floor. They are designed to speed the training phase of AI models, a costly and time-consuming process. Accelerators such as graphics processing units (GPUs) speed processing jobs by offloading highly parallel tasks from the CPU and executing them across many cores at once.

Custom chips and accelerators are so important in HPC applications that Groq introduced its LPU, a purpose-built, software-driven language processing unit for large language models. Large language models work by analyzing a sequence of words, then predicting the next word in the sequence. How accurately they predict the next word is a critical factor in determining performance. The Groq LPU accelerator is designed for sequential processing as well as power efficiency. Separately, xAI's similarly named Grok AI chatbot is said to be faster, to have access to more current data, and to respond with a bit of wit.
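
The "predict the next word" idea can be illustrated with a toy model. This is a deliberately simplified sketch: real large language models use neural networks over subword tokens and long contexts, not word-pair counts. The bigram counter below only shows how a prediction is drawn from what came before, and why prediction quality depends on the data the model has seen. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, how often every other word immediately follows it."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never followed by anything."""
    counts = model.get(word.lower())  # .get() does not create a new entry in the defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the chip runs the model and the model predicts the next word"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # → model
```

Here "model" follows "the" twice while "chip" and "next" follow it once each, so "model" is the prediction.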

Similar to efforts in data centers, HPC system designers are scrambling to find ways to reduce the power consumed by exascale computers, which perform at least a quintillion (10^18) floating-point operations per second. One presentation reported multiple supercomputers drawing 40 to 50 megawatts, comparable to the demand of a medium-sized city. An individual server can draw 10 kW. Demands for increasing performance cannot continue to drive ever-higher power consumption.
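
Megawatts measure power, a rate; energy is power multiplied by time. The sketch below converts a continuous draw into annual energy, assuming the system runs at the quoted draw all year. The `utilization` parameter is a hypothetical knob for partial-year operation.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_gwh(power_mw: float, utilization: float = 1.0) -> float:
    """Energy in gigawatt-hours for a system drawing `power_mw` megawatts over one year.

    `utilization` is the assumed fraction of the year spent at that draw
    (1.0 means continuous operation).
    """
    return power_mw * HOURS_PER_YEAR * utilization / 1000  # MWh -> GWh


for mw in (40, 50):
    print(f"{mw} MW continuous -> {annual_energy_gwh(mw):.1f} GWh/year")
```

At continuous operation, 40 to 50 MW works out to roughly 350 to 440 GWh per year.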

Recognition of the immense carbon footprint of supercomputers has created a new criterion for system design. The HPC industry has long ranked the world's 500 most powerful supercomputers (the TOP500 list) by sustained performance measured in petaflops, where one petaflops is one quadrillion (10^15) floating-point operations per second. The drive for power reduction is reflected in the companion Green500 list, which ranks supercomputers by energy efficiency in gigaflops per watt.
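
An efficiency ranking such as the Green500 divides sustained performance by power draw. The sketch below shows the unit conversion; the 1,000 PFLOPS at 20 MW system is hypothetical and not taken from any published list.

```python
def gflops_per_watt(rmax_pflops: float, power_mw: float) -> float:
    """Energy efficiency in GFLOPS/W from sustained performance (PFLOPS) and power (MW)."""
    gflops = rmax_pflops * 1e6   # 1 PFLOPS = 10**6 GFLOPS
    watts = power_mw * 1e6       # 1 MW = 10**6 W
    return gflops / watts


# Hypothetical exascale system: 1,000 PFLOPS (1 exaflops) sustained at 20 MW.
print(f"{gflops_per_watt(1000, 20):.1f} GFLOPS/W")  # → 50.0 GFLOPS/W
```

Because both conversions are factors of 10^6, GFLOPS/W is numerically equal to PFLOPS/MW.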

Rising power levels are also impacting the ability to keep equipment cool. The total energy consumed by a supercomputer is often split equally between computation and cooling systems.
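
Data centers commonly express this overhead as power usage effectiveness (PUE): total facility energy divided by the energy delivered to IT equipment. A minimal sketch, assuming only computation, cooling, and an optional lump of other overhead (power conversion, lighting) contribute:

```python
def pue(it_energy: float, cooling_energy: float, other_overhead: float = 0.0) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    return (it_energy + cooling_energy + other_overhead) / it_energy


# An equal split between computation and cooling (arbitrary units).
print(pue(it_energy=1.0, cooling_energy=1.0))  # → 2.0
```

An equal split therefore implies a PUE of about 2.0 before any other overhead; a facility whose cooling takes only a fraction of the compute energy scores closer to 1.0.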

Traditional HPC server designs rely on cooling airflow from a bank of fans. The exhibition floor featured several more efficient cooling systems, including heat pipes, cold plates, and immersion technology.

Heat pipes are an effective way to cool specific hot spots where circulating ambient air cannot maintain safe temperatures and the heat can be dissipated at a location relatively close by.

Heat pipes transfer heat from one location to another using a working fluid sealed inside a tube: the liquid absorbs heat as it vaporizes at the hot end, and the vapor releases that heat as it condenses back into a liquid at the cooler end.
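
Because vaporization absorbs a large amount of heat per kilogram, a heat pipe needs to evaporate very little fluid. The sketch below estimates the required evaporation rate from heat load divided by latent heat. It assumes water as the working fluid with a latent heat of about 2.4 MJ/kg (roughly its value near 40 to 60 °C); the 100 W hot spot is hypothetical.

```python
# Approximate latent heat of vaporization of water near 40-60 °C, in J/kg (assumed value).
H_FG_WATER = 2.4e6


def evaporation_rate_g_per_s(heat_w: float, latent_heat_j_per_kg: float = H_FG_WATER) -> float:
    """Mass of working fluid that must evaporate each second to carry `heat_w` watts (g/s)."""
    return heat_w / latent_heat_j_per_kg * 1000  # kg/s -> g/s


print(f"{evaporation_rate_g_per_s(100):.3f} g/s")  # about 0.042 g/s for a 100 W hot spot
```

Only a few hundredths of a gram per second must cycle between the hot and cool ends, which is why a small sealed tube can move substantial heat.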

Cold plates absorb heat from individual devices by circulating chilled liquid to a heat transfer plate in close contact with a processor or ASIC.
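
Sizing a cold-plate loop follows the sensible-heat relation Q = ṁ · c_p · ΔT: the coolant's mass flow times its specific heat times its temperature rise must equal the heat removed. A sketch, assuming water coolant (c_p ≈ 4186 J/(kg·K), density ≈ 1 kg/L) and a hypothetical 700 W processor whose coolant may warm by 10 K:

```python
WATER_CP = 4186.0      # specific heat of water, J/(kg*K)
WATER_DENSITY = 1.0    # kg/L, approximate


def coolant_flow_lpm(heat_w: float, delta_t_k: float,
                     cp: float = WATER_CP, density_kg_per_l: float = WATER_DENSITY) -> float:
    """Liters per minute of coolant needed to absorb `heat_w` with a `delta_t_k` temperature rise.

    Rearranges Q = m_dot * cp * dT into m_dot = Q / (cp * dT).
    """
    mass_flow_kg_s = heat_w / (cp * delta_t_k)
    return mass_flow_kg_s / density_kg_per_l * 60  # kg/s -> L/s -> L/min


# Hypothetical 700 W processor, coolant allowed to warm by 10 K across the plate.
print(f"{coolant_flow_lpm(700, 10):.2f} L/min")
```

Roughly one liter per minute suffices in this case; halving the allowed temperature rise doubles the required flow.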

Other designs feature a sealed enclosure that allows the liquid to come in direct contact with a device.

The warmed liquid is returned to a nearby chiller and recirculated.

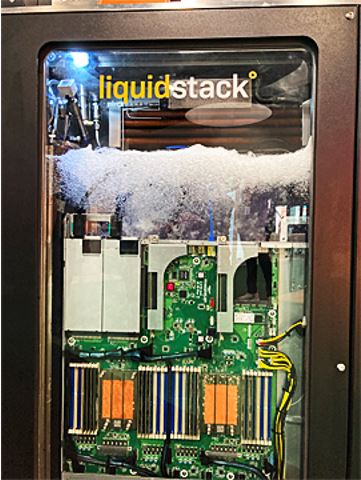

Immersion systems submerge a series of PCBs or an entire rack in a tank of non-corrosive dielectric liquid that is in direct contact with every component on the board. In two-phase designs, the liquid boils at component surfaces, holding them at a near-constant temperature; the vapor is condensed and returned to the tank in a closed loop.

Liquid cooling systems offer much higher cooling efficiency and lower power consumption, run nearly silently, and require less maintenance than traditional air cooling. They also permit greater equipment density, allowing better use of floor space.

Adopting liquid cooling will require significant changes to equipment design and to the infrastructure of HPC installations, and will generate demand for no-drip, blind-mate liquid connectors.

Several booth representatives felt that fan cooling has reached its effective limits and conversion to liquid cooling by hyperscale data centers is inevitable.

InfiniBand continues to be widely used in supercomputer clusters due to its high reliability, low latency, and high bandwidth. PCIe is the standard interface between a server PCB and an accelerator module. The PCI Special Interest Group (PCI-SIG) booth featured demonstrations of PCIe technology in HPC applications, and the PCI-SIG used the venue to announce CopperLink as the name for PCIe internal and external cables.

Given the high cost of building and operating a supercomputer, access to HPC resources is increasingly available via subscription. A user can easily contract for a level of computing power which can scale to satisfy variable demands.

The potential of quantum computing was the subject of multiple technical sessions and the focus of several booths on the expo floor. There are at least five different forms of quantum computing, with ongoing research in each; the capabilities quantum computing promises have made mastery of this technology a national security priority. Many current quantum computers, notably those based on superconducting qubits, require extreme cold to keep their qubits stable. Major corporations such as IBM have begun providing subscription access to their quantum computers. The SC23 Quantum Village gave quantum computing companies and users an opportunity to engage with industry leaders, showcase emerging products, tools, and services, and share research on this exciting but baffling technology.

Current quantum computers make extensive use of precision coaxial connectors, but a significant market for connectors used in quantum computers is not expected to materialize for at least 10 years.

SC attendees tend to be system users rather than system designers or component buyers, and booths are typically staffed by marketing personnel and scientists rather than design engineers. As a result, only two connector suppliers participated in SC23.

The BizLink booth featured a broad array of power, signal, and PCB connectors as well as pluggable optical transceivers.

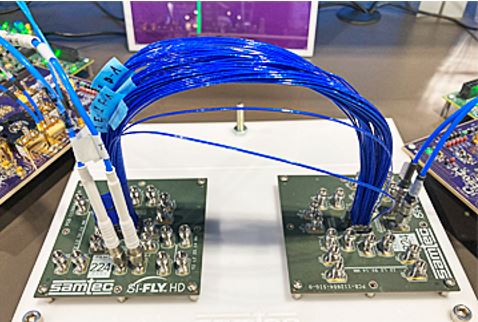

The large Samtec booth featured several live demonstrations of high-speed channels, including 224 Gb/s PAM4 over each of 64 12-inch differential-pair cables, for an aggregate 6.4 Tb/s bidirectional channel. The cables were terminated with next-generation Si-Fly™ connectors.
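
PAM4 signaling carries two bits per symbol, so a 224 Gb/s lane runs at 112 GBd. The demo's aggregate figure can be reconciled in one way, under two assumptions not stated in the demo description: the 64 pairs form 32 lanes in each direction (one transmit and one receive pair per lane), and each 224 Gb/s lane carries about 200 Gb/s of payload after encoding and error-correction overhead. The function names below are illustrative.

```python
PAM4_BITS_PER_SYMBOL = 2  # four amplitude levels -> 2 bits per symbol


def symbol_rate_gbaud(line_rate_gbps: float, bits_per_symbol: int = PAM4_BITS_PER_SYMBOL) -> float:
    """Symbol (baud) rate needed to carry a given line rate."""
    return line_rate_gbps / bits_per_symbol


def aggregate_tbps(diff_pairs: int, payload_gbps_per_lane: float) -> float:
    """Per-direction throughput in Tb/s, assuming each lane uses one TX pair and one RX pair."""
    lanes_per_direction = diff_pairs // 2
    return lanes_per_direction * payload_gbps_per_lane / 1000


print(symbol_rate_gbaud(224))   # → 112.0 (GBd)
print(aggregate_tbps(64, 200))  # → 6.4 (Tb/s per direction, assumed 200 Gb/s payload per lane)
```

If the assumptions differ (for example, all 64 pairs carrying traffic in one direction), the aggregate changes accordingly.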

A second demonstration, a CXL-over-fiber solution, used PCIe 4.0 and FireFly™ transceivers to communicate over 100 meters of OM3 fiber.

A third demonstration ran 128 GT/s PAM4 signaling, the PCIe 7.0 data rate, over 18 inches of NovaRay® twinaxial cable and connectors.
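
PCIe 7.0 specifies 128 GT/s per lane using PAM4 (a 64 GBd symbol rate). The sketch below converts that per-lane rate into raw link bandwidth. It treats one transfer as one bit per lane in each direction and ignores FLIT framing, FEC, and protocol overhead, so delivered throughput will be somewhat lower.

```python
def pcie_raw_bandwidth_gbytes(gt_per_s: float, lanes: int, bidirectional: bool = True) -> float:
    """Raw PCIe link bandwidth in GB/s, ignoring FLIT framing, FEC and protocol overhead.

    Each transfer moves one bit per lane in each direction, so one direction
    carries gt_per_s * lanes gigabits per second.
    """
    per_direction_gbytes = gt_per_s * lanes / 8  # Gb/s -> GB/s
    return per_direction_gbytes * (2 if bidirectional else 1)


print(pcie_raw_bandwidth_gbytes(128, 16))        # → 512.0 (GB/s, x16 link, both directions)
print(pcie_raw_bandwidth_gbytes(128, 1, False))  # → 16.0 (GB/s, single lane, one direction)
```

A full x16 PCIe 7.0 link therefore reaches 512 GB/s of raw bidirectional bandwidth.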

The advances and technical challenges discussed in research papers and on display at SC conferences are often harbingers of what can be expected to influence the broad computer industry in the future.

SC24 will be held in Atlanta, Georgia, November 17-22, 2024.
