The Numbers that Define Modern Electronics

As the data humans create and consume reaches once unimaginable volumes, a new nomenclature has been created to describe it.

Carl Sagan, the eminent American astronomer, planetary scientist, cosmologist, astrophysicist, science communicator, author, and professor, invited people to imagine the huge number of stars in our galaxy. His famous quote, “billions upon billions of stars,” was an attempt to help ordinary citizens get their heads around an incomprehensible number.

Over the years, the bandwidth of electronic systems has increased at an incredible rate. Data transfer rates of one million bits per second (megabits/s) evolved to one billion bits per second (gigabits/s). Products already on the market are rated in terabits per second (Tb/s), or one trillion bits per second.

Data center switches are a prime example of how advanced electronics have followed a consistent path to higher speeds. In 12 years, state-of-the-art switches have bumped up from 640 gigabits/s to 51.2 terabits/s, with 102.4 Tb/s silicon already on the roadmap.
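
The switch figures above imply an average doubling period. The Python sketch below assumes smooth exponential growth between the two endpoints, which is a simplification, since real switch silicon arrives in discrete generations; the function name `years_per_doubling` is illustrative.

```python
import math

def years_per_doubling(start: float, end: float, years: float) -> float:
    """Average years per doubling implied by growth from `start` to `end`.

    Assumes smooth exponential growth over the whole period (a simplification).
    """
    doublings = math.log2(end / start)
    return years / doublings

# Switch bandwidth figures from the text, in Gb/s.
start_gbps = 640.0     # state of the art 12 years earlier
end_gbps = 51_200.0    # 51.2 Tb/s today

print(f"overall growth: {end_gbps / start_gbps:.0f}x")                  # 80x
print(f"doublings: {math.log2(end_gbps / start_gbps):.2f}")             # 6.32
print(f"years per doubling: {years_per_doubling(start_gbps, end_gbps, 12):.2f}")  # 1.90
```

A result of just under two years per doubling matches the trend described in the article; the step to 102.4 Tb/s would add one more doubling.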


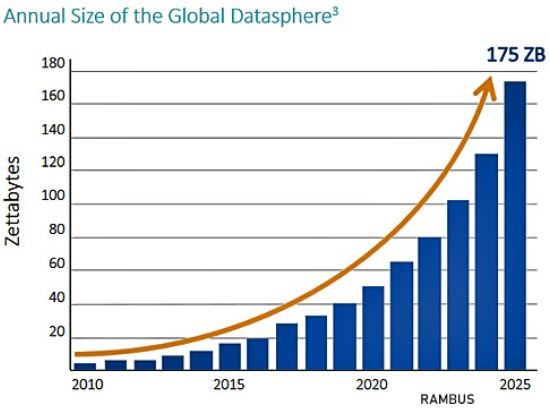

With data center bandwidth continuing to double every two years, these numbers will keep growing, requiring new ways to express extremely large quantities. Fortunately, the International Bureau of Weights and Measures has extended the SI prefixes to include peta (quadrillion, 10¹⁵), exa (quintillion, 10¹⁸), zetta (sextillion, 10²¹), and yotta (septillion, 10²⁴), giving units such as petabytes, exabytes, zettabytes, and yottabytes. It has been roughly estimated that there are seven quintillion, five hundred quadrillion (7.5 × 10¹⁸) grains of sand on Earth. A yottabyte is a unit of information equal to one septillion (10²⁴) bytes. Try to visualize that number!
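
Because each prefix is a factor of 1,000 larger than the one before, expressing a raw count in prefixed form is a matter of finding the largest power of 1,000 that fits. A minimal Python sketch follows; the function name `si_format` is illustrative rather than from any library, and it uses decimal prefixes, not the binary kibi/mebi units some operating systems report.

```python
# Decimal SI prefix symbols; index k stands for 10**(3*k),
# so k=5 is peta (10**15) and k=8 is yotta (10**24).
PREFIXES = ["", "k", "M", "G", "T", "P", "E", "Z", "Y"]

def si_format(value: int, unit: str = "B") -> str:
    """Format a non-negative integer quantity with the largest fitting SI prefix."""
    k = 0
    # Integer comparisons stay exact even for values as large as 10**24.
    while k < len(PREFIXES) - 1 and value >= 1000 ** (k + 1):
        k += 1
    scaled = value / 1000 ** k
    return f"{scaled:g} {PREFIXES[k]}{unit}"

print(si_format(10**15))         # 1 PB (one quadrillion bytes)
print(si_format(175 * 10**21))   # 175 ZB
print(si_format(10**24))         # 1 YB

grains_of_sand = 75 * 10**17     # rough estimate from the text: 7.5 x 10**18
print(f"{10**24 / grains_of_sand:,.0f} bytes per grain of sand in a yottabyte")  # 133,333
```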

The necessity for these extreme numbers is already appearing in forecast data. The total volume of data created and consumed worldwide is expected to reach 175 zettabytes by 2025.

Electronic circuits and device characteristics are defined by extremely large and small quantities. Units such as the farad (capacitance) and the henry (inductance) are too large for many real-world applications, so component values are commonly expressed in picofarads and nanohenries.

Anyone trying to appreciate the performance gains of each new generation of devices quickly encounters this nomenclature. For example, the time it takes for a signal to switch from one voltage level to another is known as rise time. The faster a signal can switch between states, the more bits can be transmitted per second, which increases the throughput of a channel but also generates more distortion. Rise times have shrunk from nanoseconds (one billionth of a second) to picoseconds (one trillionth of a second). Other phenomena, such as the propagation of light (about 3 × 10⁻⁴ m per picosecond) or the movement of electrons, may be measured in femtoseconds, which are 1/1,000th of a picosecond, or one quadrillionth of a second.
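
The light-speed figure follows directly from c ≈ 3 × 10⁸ m/s. The sketch below converts each time unit into the distance light covers in a vacuum; the function name is illustrative, and signals in circuit-board traces and optical fiber travel noticeably slower, so treat the results as upper bounds.

```python
C = 299_792_458  # speed of light in vacuum, m/s (exact by SI definition)

def light_distance_m(seconds: float) -> float:
    """Distance light travels in a vacuum in the given time, in meters."""
    return C * seconds

for unit, t in [("nanosecond", 1e-9), ("picosecond", 1e-12), ("femtosecond", 1e-15)]:
    print(f"1 {unit}: {light_distance_m(t):.2e} m")
# 1 nanosecond: 3.00e-01 m   (about 30 cm)
# 1 picosecond: 3.00e-04 m   (about 0.3 mm)
# 1 femtosecond: 3.00e-07 m  (about 0.3 micrometers)
```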

The performance of today's leading supercomputers is often measured in petaflops; one petaflop is one quadrillion (10¹⁵) floating-point operations per second.

Latency, the delay an electronic or optical signal encounters as it passes through a transmission system, is typically measured in milliseconds. Applications that require real-time monitoring, such as process automation or autonomous transportation, will demand extremely low latency. In select applications such as high-frequency automated stock trading, even nanoseconds matter, so trading operations are physically located close to a stock exchange to minimize delay.
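
To see why proximity to an exchange matters, the sketch below estimates one-way propagation delay through optical fiber. It assumes a refractive index of about 1.47, typical of silica fiber, so light in the fiber travels at roughly two-thirds of its vacuum speed; the distances are hypothetical, and switching and processing delays are ignored.

```python
C = 299_792_458      # speed of light in vacuum, m/s
FIBER_INDEX = 1.47   # assumed refractive index of silica fiber (typical value)

def fiber_delay_ns(distance_m: float, index: float = FIBER_INDEX) -> float:
    """One-way propagation delay through fiber of the given length, in ns."""
    speed_in_fiber = C / index            # m/s
    return distance_m / speed_in_fiber * 1e9

# Hypothetical distances between a trading server and an exchange.
for distance_m in (100, 1_000, 50_000):
    print(f"{distance_m:>6} m: {fiber_delay_ns(distance_m):>9,.0f} ns one way")
```

Under this assumption, each kilometer of fiber adds roughly 4,900 ns each way, which is why shortening the path pays off when competitors are separated by nanoseconds.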

Areal density is a measure of the amount of data that can be stored in a given area of storage media and is expressed in gigabits per square inch. The most advanced disk drives are approaching one terabit per square inch. The largest 3.5” hard drive currently holds 26 terabytes, a 26% increase over 2021.
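
For readers more used to metric units, the sketch below converts areal density from bits per square inch to bits per square millimeter (1 inch is exactly 25.4 mm) and back-calculates the earlier capacity implied by the 26% figure. The function name `per_sq_mm` is illustrative.

```python
SQ_MM_PER_SQ_INCH = 25.4 ** 2   # 645.16 mm^2 in one square inch

def per_sq_mm(bits_per_sq_inch: float) -> float:
    """Convert areal density from bits/in^2 to bits/mm^2."""
    return bits_per_sq_inch / SQ_MM_PER_SQ_INCH

print(f"1 Tb/in^2 = {per_sq_mm(1e12):.3g} bits/mm^2")   # about 1.55 billion

# 26 TB after a 26% increase implies the earlier largest capacity was:
earlier_tb = 26 / 1.26
print(f"implied 2021 capacity: {earlier_tb:.1f} TB")     # about 20.6 TB
```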

Hyperscale data centers are loosely defined as incorporating more than 100,000 servers and 10,000 switches, requiring $100 billion in annual capital expenses. Bandwidth growth is approaching a 40% compound annual growth rate (CAGR).
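
Compound growth adds up quickly. The sketch below computes how long a quantity growing at a given CAGR takes to double; at 40% the answer is just over two years, in line with the doubling every two years cited earlier. The function names are illustrative.

```python
import math

def doubling_time(cagr: float) -> float:
    """Years for a quantity growing at compound annual rate `cagr` to double."""
    return math.log(2) / math.log(1 + cagr)

def grow(value: float, cagr: float, years: int) -> float:
    """Value after compounding at `cagr` for `years` years."""
    return value * (1 + cagr) ** years

print(f"doubling time at 40% CAGR: {doubling_time(0.40):.2f} years")   # 2.06
print(f"growth over 5 years: {grow(1.0, 0.40, 5):.2f}x")               # 5.38x
```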

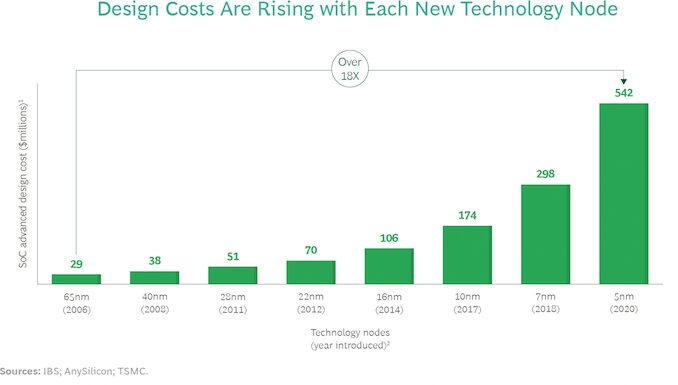

The semiconductor industry produces an incredible number of chips per year: 1.15 trillion semiconductors were shipped in 2021. In the U.S., the CHIPS and Science Act of 2022 will provide $52.7 billion to fund new chip development and manufacturing. The European Chips Act will invest €43 billion (public and private funds) in chip production. Fabricating high-end chips involves both enormous quantities and tiny dimensions. Increasing the density of transistors on a chip improves performance while reducing power consumption and cost. The IBM Telum chip, built on a 7 nanometer (nm) manufacturing process, packs 22.5 billion transistors onto a single chip. TSMC is currently refining a 3 nm process, with the goal of eventually perfecting 2 nm and even 1 nm devices. We may be nearing the economic limits of smaller chip geometries, however, as the cost of development and manufacturing rises exponentially.
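
To make the annual shipment figure more tangible, the sketch below converts it into an average per-second rate. It assumes a 365-day year and even shipments across the year; the function name `per_second` is illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60   # 31,536,000 s (ignores leap years)

def per_second(count_per_year: float) -> float:
    """Average rate per second for an annual total."""
    return count_per_year / SECONDS_PER_YEAR

chips_2021 = 1.15e12   # semiconductors shipped in 2021, from the text
print(f"about {per_second(chips_2021):,.0f} chips shipped every second")   # about 36,466
```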

Unlike most digital standards, Peripheral Component Interconnect Express (PCIe) defines performance in gigatransfers per second (GT/s) rather than gigabits per second. The two differ because the transfer rate counts every bit on the wire, including line-encoding overhead that carries no data.
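
The gap between GT/s and Gb/s depends on the line code. The sketch below assumes the published per-lane rates and encodings for PCIe 1.x through 5.0 (8b/10b for the first two generations, 128b/130b afterward), with one bit per lane per transfer. It ignores packet and protocol overhead, and leaves out PCIe 6.0, whose PAM4 signaling and FLIT encoding do not fit this simple model.

```python
# Payload bits per encoded bits for each line code.
ENCODING_EFFICIENCY = {"8b/10b": 8 / 10, "128b/130b": 128 / 130}

# (generation, per-lane transfer rate in GT/s, line code)
GENERATIONS = [
    ("PCIe 1.x", 2.5, "8b/10b"),
    ("PCIe 2.0", 5.0, "8b/10b"),
    ("PCIe 3.0", 8.0, "128b/130b"),
    ("PCIe 4.0", 16.0, "128b/130b"),
    ("PCIe 5.0", 32.0, "128b/130b"),
]

def payload_gbps(gt_per_s: float, line_code: str) -> float:
    """Per-lane data rate after removing line-encoding overhead (1 bit per transfer)."""
    return gt_per_s * ENCODING_EFFICIENCY[line_code]

for name, rate, code in GENERATIONS:
    overhead = 1 - ENCODING_EFFICIENCY[code]
    print(f"{name}: {rate:g} GT/s -> {payload_gbps(rate, code):.2f} Gb/s per lane "
          f"({overhead:.1%} encoding overhead)")
```

The overhead is 20% for 8b/10b but only about 1.5% for 128b/130b, which is why the difference between the two units shrank from PCIe 3.0 onward.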

Data centers currently consume 1.5% of total global energy production, and up to half of that is spent on cooling. A typical data center can cover acres and consume up to 1,000 kWh per square meter. In spite of intense efforts to reduce power consumption, current servers and switches are pushing the limits of forced-air cooling.

The joule is a unit of energy. Picojoules per bit (pJ/b) has become a key measure of power efficiency. A system running at 1 Tb/s with an efficiency of 1 pJ/b will consume one watt of power. Multiply that by the thousands of servers a typical data center contains, and power consumption and the resulting heat add up quickly.
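
The one-watt figure follows from power = bit rate × energy per bit, since a watt is one joule per second. A minimal sketch; the function name is illustrative, and the 51.2 Tb/s at 5 pJ/b case is a hypothetical example, not a figure from the text or any specific product.

```python
def link_power_watts(bits_per_second: float, picojoules_per_bit: float) -> float:
    """Power drawn at a given bit rate and energy per bit (1 pJ = 1e-12 J)."""
    return bits_per_second * picojoules_per_bit * 1e-12

print(link_power_watts(1e12, 1.0))      # 1 Tb/s at 1 pJ/b: about 1 W
print(link_power_watts(51.2e12, 5.0))   # hypothetical 51.2 Tb/s at 5 pJ/b: about 256 W
```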

Lane rate refers to the signaling rate of each lane in a high-speed data channel. It has evolved from 10 Gb/s to 25, 40, 100, and now 200 Gb/s. Next-generation 800 Gb/s Ethernet, for example, can be achieved using four lanes at 200 Gb/s each or eight lanes at 100 Gb/s. 1.6 Tb/s Ethernet is already on the roadmap.
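
Port speed is simply the lane count multiplied by the lane rate. The sketch below uses nominal rates only; actual signaling rates run slightly higher to carry forward error correction and encoding overhead, which this model ignores. The function names are illustrative.

```python
import math

def port_speed_gbps(lanes: int, lane_rate_gbps: float) -> float:
    """Nominal port speed: number of lanes times the per-lane rate."""
    return lanes * lane_rate_gbps

def lanes_needed(target_gbps: float, lane_rate_gbps: float) -> int:
    """Fewest whole lanes that reach a nominal target port speed."""
    return math.ceil(target_gbps / lane_rate_gbps)

print(port_speed_gbps(4, 200))   # 800: 4 lanes x 200 Gb/s
print(port_speed_gbps(8, 100))   # 800: 8 lanes x 100 Gb/s
print(lanes_needed(1600, 200))   # 8 lanes of 200 Gb/s for a 1.6 Tb/s port
```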

Scientists recently calculated the absolute speed limit of an optoelectronic channel as one petahertz, which is one million gigahertz.

The quest to express ever-larger and ever-smaller quantities continues. In late November 2022, several new prefixes were introduced. The number 10²⁷ is now officially known as ronna, and 10³⁰ is now quetta, with ronto (10⁻²⁷) and quecto (10⁻³⁰) as their tiny counterparts. There’s also the sagan, an informal unit created to honor Sagan that defines four billion: two billion plus two billion, or the smallest number that could equal “billions and billions.”

Learn more about the development of the technologies that make up our world in Bob Hult’s Tech Trends Series.
